Researchers hope the concept of "expansion entropy" will become a simple, go-to tool to identify (sometimes hidden) chaos in a wide range of model systems

From the Journal: Chaos

WASHINGTON, D.C., July 28, 2015 — Does the flap of a butterfly’s wings in Brazil set off a tornado in Texas? This intriguing question — the title of a talk given by MIT meteorologist Edward Lorenz at a 1972 meeting — has come to embody the popular conception of a chaotic system, one in which a small difference in initial conditions will cascade toward a vastly different outcome in the future.

Mathematically, extreme sensitivity to initial conditions can be represented by a quantity called a Lyapunov exponent, which is positive if two infinitesimally close starting points diverge exponentially as time progresses. Yet Lyapunov exponents have limitations as a definition of chaos. They test for chaos only in particular solutions of a model, not in the model itself, and they can be positive even when the underlying model is too simple to be considered chaotic, for example in scenarios of unlimited growth.
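
To make the Lyapunov exponent concrete, here is a minimal sketch (our own illustration, not code from the paper) that estimates the exponent of a one-dimensional map by averaging the logarithm of the local stretching factor |f′(x)| along a single trajectory. The two maps are assumptions chosen for illustration: the logistic map x → 4x(1 − x), which is chaotic on the interval [0, 1], and the growth map x → 2x, which simply doubles forever. The function name `lyapunov_1d` and its parameters are hypothetical.

```python
import math


def lyapunov_1d(f, df, x0, n=100_000, burn_in=1_000):
    """Estimate the Lyapunov exponent of the 1-D map f along the orbit of x0.

    Averages log|f'(x)| over n iterates, i.e. the per-step exponential rate
    at which two infinitesimally close starting points separate.
    """
    x = x0
    for _ in range(burn_in):  # discard the initial transient
        x = f(x)
    total = 0.0
    for _ in range(n):
        total += math.log(abs(df(x)))  # local stretching factor at x
        x = f(x)
    return total / n


# Illustrative maps (assumptions, not taken from the paper).
def logistic(x):
    return 4.0 * x * (1.0 - x)  # bounded and chaotic on [0, 1]


def d_logistic(x):
    return 4.0 * (1.0 - 2.0 * x)


def growth(x):
    return 2.0 * x  # unlimited growth, not chaotic


def d_growth(x):
    return 2.0


lam_logistic = lyapunov_1d(logistic, d_logistic, x0=0.1234)
# Short run with no burn-in so that 2**n stays within floating-point range.
lam_growth = lyapunov_1d(growth, d_growth, x0=1.0, n=1_000, burn_in=0)
print(f"logistic: {lam_logistic:.3f}  growth: {lam_growth:.3f}  ln 2: {math.log(2):.3f}")
```

Both estimates land near ln 2 ≈ 0.693, so the Lyapunov exponent alone does not distinguish the chaotic logistic map from plain unlimited growth, which is the limitation described above.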

Now researchers from the University of Maryland have come up with a new definition of chaos that applies more broadly than Lyapunov exponents and other previous definitions of chaos. The new definition fits on a few lines, can be easily approximated by numerical methods, and works for a wide variety of chaotic systems. The researchers present the definition in a paper in the 25th anniversary issue of the journal Chaos, from AIP Publishing.

Hunting Down Hidden Chaos

Edward Lorenz, the scientist whose work gave rise to the term “the butterfly effect,” first noticed chaotic characteristics in weather models. In 1963 he published a set of differential equations to describe atmospheric airflow and noted that tiny variations in initial conditions could drastically alter the solution to the equations over time, making it difficult to predict the weather in the long term.

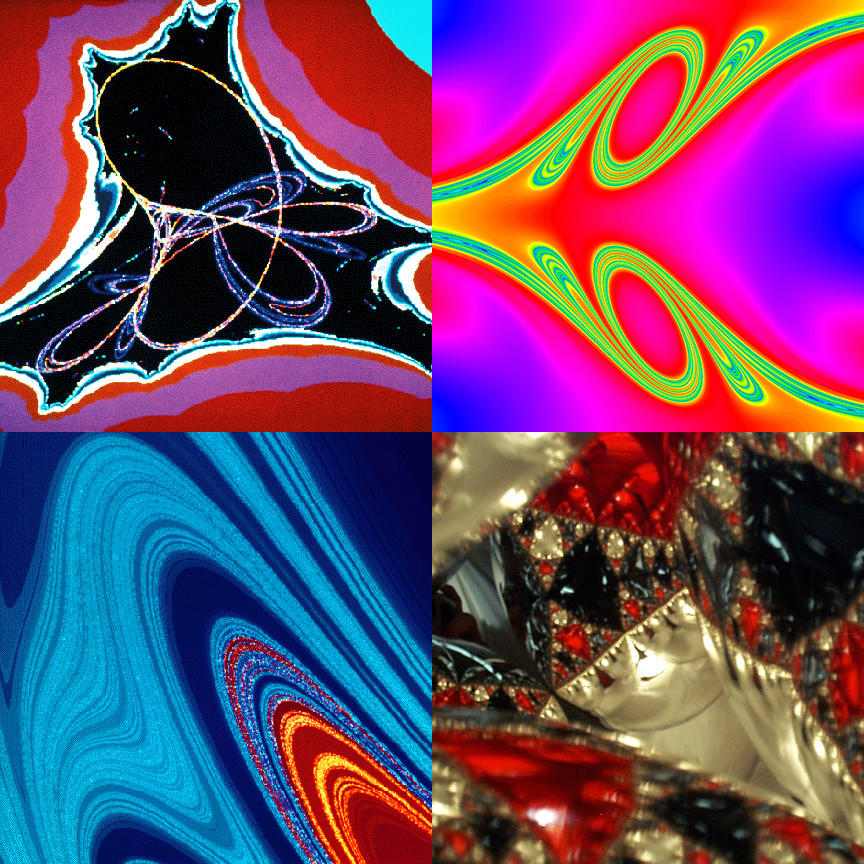

The chaotic solution to Lorenz’s equations looks, fittingly, like the two wings of a butterfly. The shape can be categorized, in math-speak, as an attractor, a set toward which nearby trajectories are drawn, which makes it easy to identify with Lyapunov exponents, said Brian Hunt, a mathematician at the University of Maryland and a member of the university’s “Chaos Group.” Yet not all chaotic behavior is quite so clear, he said.

As an example, Hunt describes four Christmas balls stacked in a pyramid, a setup analyzed by Hunt’s colleagues David Sweet, Edward Ott, James Yorke, and others at the University of Maryland. Light hitting the shiny spheres reflects off in all directions. Most of the light travels simple paths, but occasional photons can become trapped in the interior of the pyramid, bouncing chaotically back and forth off the ornaments. These rare chaotic light paths are categorized mathematically as repellers, and they can be difficult to find from the model equations unless you know exactly where to look.

“Our definition of chaos identifies chaotic behavior even when it lurks in the dark corners of a model,” said Hunt, who collaborated on the paper with Edward Ott, a professor at the University of Maryland and the author of the graduate textbook “Chaos in Dynamical Systems.” The two researchers also broadened the definition by including systems that are forced, meaning that external factors continue to push or pull on the model as it evolves.

Researchers commonly encounter both kinds of systems. Chaotic repellers appear in physical systems such as water flowing through a pipe, asteroid orbits, and chemical reactions; forced systems appear, for example, in bird flocks, geophysics, and the way the body controls the heartbeat.

Calculating Uncertainty

To fit the generally recognized forms of chaos under one umbrella definition, Hunt and Ott turned to a concept called entropy. In a system that changes over time, entropy represents the rate at which disorder and uncertainty build up.

The idea that entropy could be a proxy for chaos is not new, but the standard definitions of entropy, such as metric entropy and topological entropy, are trapped in the mathematical equivalent of a straitjacket. The definitions are difficult to apply computationally and have stringent prerequisites that disqualify many physical and biological systems of interest to scientists.

Hunt and Ott defined a new type of flexible entropy, called expansion entropy, which can be applied to more realistic models of the world. The definition can be approximated accurately by a computer and can accommodate systems, like regional weather models, that are forced by potentially chaotic inputs. The researchers define chaotic models as ones that exhibit positive expansion entropy.
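
The sketch below shows how such a quantity might be approximated numerically for a map of one variable. It rests on our reading of the definition, which should be checked against the paper: on a region S, E_t is the average over S of the product of the t-step Jacobian's singular values that exceed 1 (for a one-variable map, simply max(1, |(f^t)′(x)|)), counting only points that are still in S after t steps, and the expansion entropy is the growth rate (1/t) ln E_t as t grows. The finite t, the grid size, the region S = [0, 1], and the function name `expansion_entropy_1d` are illustrative assumptions.

```python
import numpy as np


def expansion_entropy_1d(f, df, t, a=0.0, b=1.0, n=200_000):
    """Finite-t estimate (1/t) ln E_t for a 1-D map on the region S = [a, b].

    Assumed form of E_t: the average over S of max(1, |(f^t)'(x)|), counting
    only points x whose t-th iterate f^t(x) is still in S.
    """
    x = a + (b - a) * (np.arange(n) + 0.5) / n  # midpoint grid covering S
    deriv = np.ones(n)
    for _ in range(t):
        deriv *= df(x)  # chain rule: (f^t)'(x) is the product of f'(x_k) along the orbit
        x = f(x)
    in_s = (x >= a) & (x <= b)
    g = np.maximum(1.0, np.abs(deriv))  # 1-D case of "product of singular values > 1"
    e_t = np.mean(np.where(in_s, g, 0.0))  # integral over S divided by the length of S
    return np.log(e_t) / t


# Illustrative maps (assumptions, not taken from the paper).
def logistic(x):
    return 4.0 * x * (1.0 - x)


def d_logistic(x):
    return 4.0 * (1.0 - 2.0 * x)


def growth(x):
    return 2.0 * x


def d_growth(x):
    return 2.0


h_logistic = expansion_entropy_1d(logistic, d_logistic, t=12)
h_growth = expansion_entropy_1d(growth, d_growth, t=12)
print(f"logistic: {h_logistic:.3f}  growth: {h_growth:.4f}")
```

Under these assumptions the logistic map gives a value close to ln 2, while the growth map gives a value near zero: only the fraction 2^(−t) of points that stay in S counts, and those are stretched by a factor 2^t, so E_t stays near 1. Unlike the Lyapunov exponent, this quantity does not reward unbounded growth. For both maps, a point that ends in S was also in S at every intermediate step, so the sketch does not depend on whether the definition requires that.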

The researchers hope expansion entropy will become a simple, go-to tool to identify chaos in a wide range of model systems. Pinpointing chaos in a system can be a first step to determining whether the system can ultimately be controlled.

For example, Hunt explains, two identical chaotic systems with different initial conditions may evolve completely differently, but if the systems are forced by external inputs they may start to synchronize. By applying the expansion entropy definition of chaos and characterizing whether the original systems respond chaotically to inputs, researchers can tell whether they can wrest some control over the chaos through inputs to the system. Secure communications systems and pacemakers for the heart would be just two of the potential applications of this type of control, Hunt said.
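
A toy illustration of the synchronization idea follows; it is our own construction, not the authors' model. The hypothetical forced map y → (1 − c)·4y(1 − y) + c·u blends logistic dynamics with an external input u, here taken from a separate chaotic logistic orbit. With c = 0 it is the unforced chaotic map, and two copies started 10^(−10) apart drift far apart. With c = 0.8 (an assumed forcing strength), the map's stretching factor (1 − c)·|4(1 − 2y)| never exceeds 0.8, so the system does not respond chaotically to the input, and two copies fed the same input converge. The names `logistic_orbit` and `trajectory` are hypothetical.

```python
def logistic_orbit(x0, n):
    """Chaotic drive signal: n iterates of the logistic map x -> 4x(1 - x)."""
    xs, x = [], x0
    for _ in range(n):
        xs.append(x)
        x = 4.0 * x * (1.0 - x)
    return xs


def trajectory(y0, inputs, c):
    """Hypothetical forced map: logistic dynamics blended with input u by weight c.

    c = 0 is the unforced chaotic logistic map. For c > 0.75 the stretching
    factor (1 - c) * |4(1 - 2y)| <= 4(1 - c) < 1, so nearby states are pulled
    together; with u in [0, 1], y also stays in [0, 1].
    """
    ys, y = [], y0
    for u in inputs:
        y = (1.0 - c) * 4.0 * y * (1.0 - y) + c * u
        ys.append(y)
    return ys


n = 200
drive = logistic_orbit(0.7, n)  # shared chaotic input signal

# Unforced: identical chaotic systems with slightly different starts diverge.
free_a = trajectory(0.3, drive, c=0.0)
free_b = trajectory(0.3 + 1e-10, drive, c=0.0)
max_gap_free = max(abs(p - q) for p, q in zip(free_a, free_b))

# Forced: the same input drives two very different starts together.
forced_a = trajectory(0.1, drive, c=0.8)
forced_b = trajectory(0.9, drive, c=0.8)
final_gap_forced = abs(forced_a[-1] - forced_b[-1])

print(f"unforced max gap: {max_gap_free:.2f}  forced final gap: {final_gap_forced:.1e}")
```

Here the simple bound 4(1 − c) < 1 is what guarantees synchronization; for realistic models no such bound is available, which is where a numerical test of whether the response is chaotic, such as the expansion entropy approach described above, would come in.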

###

For More Information:

Jason Socrates Bardi

jbardi@aip.org

240-535-4954

@jasonbardi

Article Title

Authors

Brian R. Hunt and Edward Ott

Author Affiliations

University of Maryland