Quantum dots infuse a machine vision sensor with superhuman adaptation speed.

From the Journal: Applied Physics Letters

WASHINGTON, July 1, 2025 — In blinding bright light or pitch-black dark, our eyes can adjust to extreme lighting conditions within a few minutes. The human vision system, including the eyes, neurons, and brain, can also learn and memorize settings to adapt faster the next time we encounter similar lighting challenges.

In an article published this week in Applied Physics Letters, by AIP Publishing, researchers at Fuzhou University in China created a machine vision sensor that uses quantum dots to adapt to extreme changes in light in about 40 seconds, far faster than the human eye can, by mimicking key behaviors of the eye. Their results could be a game changer for robotic vision and autonomous vehicle safety.

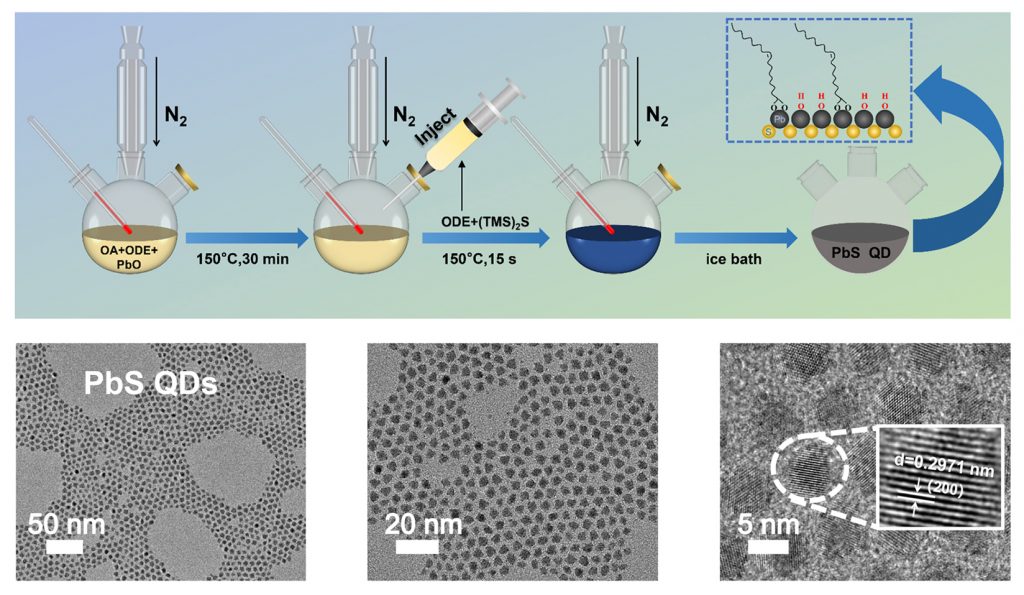

“Quantum dots are nano-sized semiconductors that efficiently convert light to electrical signals,” said author Yun Ye. “Our innovation lies in engineering quantum dots to intentionally trap charges like water in a sponge then release them when needed — similar to how eyes store light-sensitive pigments for dark conditions.”

The sensor’s fast adaptive speed stems from its unique design: lead sulfide quantum dots embedded in polymer and zinc oxide layers. The device responds dynamically by either trapping or releasing electric charges depending on the lighting, similar to how eyes store energy for adapting to darkness. The layered design, together with specialized electrodes, proved highly effective in replicating human vision and optimizing its light responses for the best performance.
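To make the trap-and-release idea concrete, here is a toy numerical sketch in Python. It is not the authors' device model. It assumes, purely for illustration, that the fraction of filled charge traps relaxes exponentially toward a level set by the incoming light and that filled traps reduce the output signal. The function name `simulate_adaptation`, the time constant `tau_s`, and the saturation parameter `half_sat` are hypothetical. The value of `tau_s` is chosen only so that the response settles in roughly 40 seconds (about three time constants), and it is not a measured quantity.

```python
import math


def simulate_adaptation(light_levels, dt=0.1, tau_s=13.0, half_sat=1.0):
    """Toy first-order adaptation model (illustrative assumption only).

    light_levels: light intensity at each time step (arbitrary units)
    dt:           time step in seconds
    tau_s:        hypothetical trap fill/release time constant in seconds
    half_sat:     hypothetical intensity at which half the traps fill
    Returns a list of (trapped_fraction, response) tuples, one per step.
    """
    trapped = 0.0  # start fully dark-adapted: no charge trapped
    history = []
    for light in light_levels:
        # Brighter light pushes the equilibrium toward more filled traps.
        target = light / (light + half_sat)
        # Exact exponential relaxation toward the target over one step.
        trapped += (target - trapped) * (1.0 - math.exp(-dt / tau_s))
        # Filled traps suppress the output, so the signal shrinks as
        # the sensor adapts to bright light.
        response = light * (1.0 - trapped)
        history.append((trapped, response))
    return history


# Sudden step from darkness to bright light, held for 60 s
# (600 steps of 0.1 s each).
trace = simulate_adaptation([100.0] * 600)
```

In this sketch, the response is largest just after the light switches on and falls as the traps fill. Feeding in a low light level afterward would release the trapped charge and restore sensitivity, which is the qualitative behavior the article describes.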

“The combination of quantum dots, which are light-sensitive nanomaterials, and bio-inspired device structures allowed us to bridge neuroscience and engineering,” Ye said.

Not only does their device adapt dynamically to bright and dim lighting, but it also outperforms existing machine vision systems by cutting the large amount of redundant data those systems generate.

“Conventional systems process visual data indiscriminately, including irrelevant details, which wastes power and slows computation,” Ye said. “Our sensor filters data at the source, similar to the way our eyes focus on key objects, and our device preprocesses light information to reduce the computational burden, just like the human retina.”

In the future, the research group plans to enhance their device with larger sensor arrays and edge-AI chips, which perform AI data processing directly on the sensor, or to pair it with other smart devices in smart cars to broaden its applicability to autonomous driving.

“Immediate uses for our device are in autonomous vehicles and robots operating in changing light conditions like going from tunnels to sunlight, but it could potentially inspire future low-power vision systems,” Ye said. “Its core value is enabling machines to see reliably where current vision sensors fail.”

###

Article Title

A back-to-back structured bionic visual sensor for adaptive perception

Authors

Xing Lin, Zexi Lin, Wenxiao Zhao, Sheng Xu, Enguo Chen, Tailiang Guo, and Yun Ye

Author Affiliations

Fuzhou University, Fujian Science and Technology Innovation Laboratory for Optoelectronic Information of China