From the Journal: The Journal of the Acoustical Society of America

WASHINGTON, August 3, 2021 — When we speak, although we may not be aware of it, we use our auditory and somatosensory systems to monitor the results of the movements of our tongue or lips.

This sensory information plays a critical role in how we learn to speak and maintain accuracy in these behaviors throughout our lives. Since we cannot typically see our own faces and tongues while we speak, however, the potential role of visual feedback has remained less clear.

In the Journal of the Acoustical Society of America, University of Montreal and McGill University researchers present a study exploring how readily speakers will integrate visual information about their tongue movements — captured in real time via ultrasound — during a speech motor learning task.

“Participants in our study, all typical speakers of Quebec French, wore a custom-molded prosthesis in their mouths to change the shape of their hard palate, just behind their upper teeth, to disrupt their ability to pronounce the sound ‘s’ — in effect causing a temporary speech disorder related to this sound,” said Douglas Shiller, an associate professor at the University of Montreal.

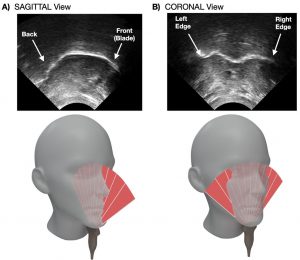

The participants were divided into three groups. One group received visual feedback of their tongue from an ultrasound sensor placed under the chin and oriented to produce an image in the sagittal plane (a slice down the midline, from front to back).

A second group also received visual feedback of their tongue, but with the sensor rotated 90 degrees relative to the first condition, producing an image of the tongue in the coronal plane (a slice across the tongue, from left to right).

A third group, the control group, received no visual feedback of their tongue.

All participants were given 20 minutes to practice the “s” sound with the prosthesis in place.

“We compared the acoustic properties of the ‘s’ across the three groups to determine to what degree visual feedback enhanced the ability to adapt tongue movements to the perturbing effects of the prosthesis,” said Shiller.

As expected, participants in the coronal visual feedback group improved their “s” production to a greater degree than those receiving no visual feedback.

“We were surprised, however, to find participants in the sagittal visual feedback group performed even worse than the control group that received no visual feedback,” said Shiller. “In other words, visual feedback of the tongue was found to either enhance motor learning or interfere with it, depending on the nature of the images being presented.”

The group’s findings broadly support the idea that ultrasound tools can improve speech learning outcomes when used, for example, to treat speech disorders or to help learners acquire new sounds in a second language.

“But care must be taken in precisely how visual information is selected and presented to a patient,” said Shiller. “Visual feedback that isn’t compatible with the demands of the speaking task may interfere with the person’s natural mechanisms of speech learning — potentially leading to worse outcomes than no visual feedback at all.”

###

For more information:

Larry Frum

media@aip.org

301-209-3090

Article Title

Authors

Guillaume Barbier, Ryme Merzouki, Mathilde Bal, Shari R. Baum, and Douglas M. Shiller

Author Affiliations

University of Montreal and McGill University