From the Journal: Applied Physics Letters

WASHINGTON, D.C., May 28, 2019 -- Video cameras are increasingly used to monitor human activities for surveillance, health care, home use and more, but privacy concerns and environmental conditions limit how well they work. Acoustic waves, such as sounds and other vibrations, offer an alternative medium that may bypass those limitations.

Unlike electromagnetic waves, such as those used in radar, acoustic waves can be used not only to find objects but also to identify them. In a paper in the May 28 issue of Applied Physics Letters, from AIP Publishing, researchers describe how they used a two-dimensional acoustic array and convolutional neural networks to detect and analyze the sounds of human activity and identify those activities.

“If the identification accuracy is high enough, a large number of applications could be implemented,” said Xinhua Guo, associate professor at Wuhan University of Technology. “For example, a medical alarm system could be activated if a person falls at home and it is detected. Thus, immediate help could be provided and with little privacy leaked at the same time.”

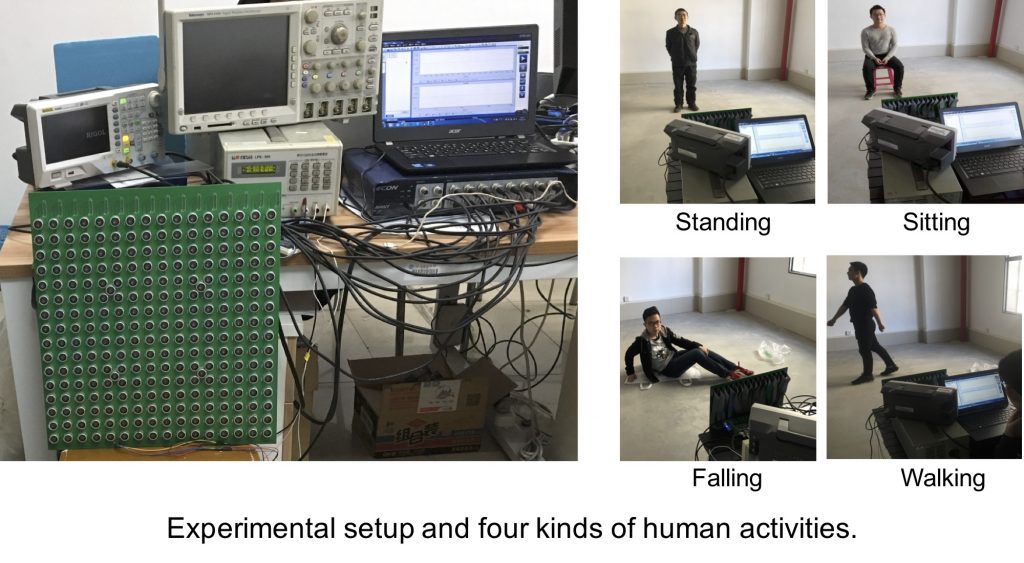

Using a two-dimensional acoustic array with 256 receivers and four ultrasonic transmitters, the researchers gathered data on four human activities: sitting, standing, walking and falling. They used a 40-kilohertz signal, above the range of human hearing, so that ordinary room noise would not contaminate the signals used to identify the activities.

CREDIT: Xinhua Guo

Their tests achieved an overall accuracy of 97.5% for time-domain data and 100% for frequency-domain data. The scientists also tested arrays with fewer receivers (eight and four) and found that these identified the activities less accurately.
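The release does not describe the signal-processing pipeline. As a rough, hypothetical illustration of what "time-domain" versus "frequency-domain" data can mean for a 40-kilohertz system, the Python sketch that follows converts a synthetic received frame into a magnitude spectrum with NumPy's FFT and keeps only the bins around the carrier. The sampling rate, frame length, noise model, band edges and function names are all assumptions made for illustration; this is not the authors' method.

```python
import numpy as np

# Illustrative assumptions, NOT values from the paper:
FS = 200_000        # sampling rate in Hz (must exceed 2 x 40 kHz)
N_SAMPLES = 2_000   # frame length: 10 ms at FS
CARRIER = 40_000    # 40 kHz signal named in the release


def synthetic_frame(noise_freq=500.0, noise_amp=2.0, seed=0):
    """Hypothetical time-domain frame: a 40 kHz echo plus louder audible room noise."""
    rng = np.random.default_rng(seed)
    t = np.arange(N_SAMPLES) / FS
    echo = np.sin(2 * np.pi * CARRIER * t)
    room_noise = noise_amp * np.sin(2 * np.pi * noise_freq * t)
    return echo + room_noise + 0.1 * rng.standard_normal(N_SAMPLES)


def to_frequency_domain(frame):
    """Return bin frequencies and the one-sided magnitude spectrum of a Hann-windowed frame."""
    spectrum = np.abs(np.fft.rfft(frame * np.hanning(frame.size)))
    freqs = np.fft.rfftfreq(frame.size, d=1 / FS)
    return freqs, spectrum


def carrier_band(freqs, spectrum, low=35_000, high=45_000):
    """Keep only bins near the carrier, discarding the audible band (band edges are assumed)."""
    mask = (freqs >= low) & (freqs <= high)
    return freqs[mask], spectrum[mask]


if __name__ == "__main__":
    freqs, spectrum = to_frequency_domain(synthetic_frame())
    print("strongest bin overall:", freqs[np.argmax(spectrum)], "Hz")
    band_freqs, band_spectrum = carrier_band(freqs, spectrum)
    print("strongest bin near carrier:", band_freqs[np.argmax(band_spectrum)], "Hz")
```

In this toy frame the room noise is twice as loud as the echo, so it dominates the full spectrum, while restricting attention to the band around 40 kHz recovers the carrier. A real system would pass features from many receivers to a classifier, such as the convolutional neural networks the researchers used; that step is outside this sketch.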

Guo said acoustic systems have an advantage over vision-based systems because privacy concerns have kept cameras from gaining wide acceptance. Low lighting or smoke can also hamper visual recognition, whereas sound waves are unaffected by such conditions.

“In future, we will go on studying complex activity and situation of random positioning,” Guo said. “As we know, human activities are complicated, taking falling as an example, and can present in various postures. We are hoping to collect more datasets of falling activity to reach higher accuracy.”

Guo said the team will also experiment with different numbers of sensors to see how effectively each configuration detects and identifies human activities. He said there is an optimal array size that would make the system viable for commercial and personal use in homes and buildings.

###

For more information:

Larry Frum

media@aip.org

301-209-3090

Article Title

A single feature for human activity recognition using two-dimensional acoustic array

Authors

Xinhua Guo, Rongcheng Su, Chaoyue Hu, Xiaodong Ye, Huachun Wu and Kentaro Nakamura

Author Affiliations

Wuhan University of Technology, Tokyo Institute of Technology